OpenAI’s latest upgrade brings more than just small tweaks.

- Meet the Models: GPT‑4.1, Mini, and Nano

- Instruction Tuning Just Got Better

- Major Gains in Coding

- 1 Million Token Context Is Now Standard

- GPT‑4.1 Mini: Small but Sharp

- Nano: Built for Speed

- Dev Teams Are Already Winning

- Follows Complex Prompts Better

- Frontend Code Just Got Easier

- Better at Images and Videos Too

- Used in Legal and Finance Right Now

- Lower Latency Across the Board

- Here’s the Pricing Breakdown

- Final Thoughts: This Is the New Baseline

GPT‑4.1, 4.1 mini, and 4.1 nano are now live—and they’re faster, smarter, and cheaper.

If you care about coding, long documents, or building with AI, this is a big deal.

Let’s break down what changed.

Meet the Models: GPT‑4.1, Mini, and Nano

Three models.

Three different speeds and sizes.

• GPT‑4.1: Full power. Best for coding, long docs, agents.

• GPT‑4.1 Mini: Fast and smart. Great for most apps.

• GPT‑4.1 Nano: Super cheap and fast. Built for light tasks.

Use what fits your build.
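One way to wire that choice into an app is a tiny lookup. This is a minimal sketch, not an official recipe: the model IDs are the ones the OpenAI API uses for this family, while the task labels and the `pick_model` helper are made up for illustration.

```python
# Model IDs for the GPT-4.1 family as exposed in the OpenAI API.
MODELS = {
    "full": "gpt-4.1",
    "mini": "gpt-4.1-mini",
    "nano": "gpt-4.1-nano",
}

# Hypothetical task labels, loosely based on the use cases in this article.
HEAVY_TASKS = {"coding", "long_docs", "agents"}
LIGHT_TASKS = {"autocomplete", "tagging", "short_answers"}


def pick_model(task: str) -> str:
    """Return a model ID for a task label, falling back to Mini."""
    if task in HEAVY_TASKS:
        return MODELS["full"]
    if task in LIGHT_TASKS:
        return MODELS["nano"]
    # Anything else goes to Mini, the "great for most apps" option.
    return MODELS["mini"]


print(pick_model("coding"))
print(pick_model("tagging"))
print(pick_model("chatbot"))
```

Defaulting to Mini keeps costs down for unknown tasks; swap the default if your app leans on heavy work.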

Instruction Tuning Just Got Better

GPT‑4.1 is way better at doing exactly what you ask.

It follows formats, avoids what it’s told not to do, and sticks to step-by-step flows.

It also says “I don’t know” when it should.

Why it matters:

Fewer mistakes.

More control.

Way more reliable agents.
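Better instruction following pays off most when you state the rules explicitly and still check the reply. Here is a rough sketch of that pattern, assuming a made-up ticket classifier: the prompt wording, the allowed categories, and the `follows_format` helper are all hypothetical, and no API call is made.

```python
import json

# Hypothetical system prompt with explicit format rules and negative instructions.
SYSTEM_PROMPT = """You classify support tickets.
Rules:
1. Reply with a single JSON object and nothing else.
2. Use exactly two keys: "category" and "confidence".
3. "category" must be one of: billing, bug, other, unknown.
4. If the ticket is unclear, use "unknown" instead of guessing.
"""

ALLOWED_CATEGORIES = {"billing", "bug", "other", "unknown"}


def follows_format(reply: str) -> bool:
    """Check that a model reply obeys the format rules in SYSTEM_PROMPT."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return False  # extra prose or broken JSON breaks rule 1
    if not isinstance(data, dict) or set(data) != {"category", "confidence"}:
        return False  # wrong shape or wrong keys breaks rule 2
    category, confidence = data["category"], data["confidence"]
    if not isinstance(category, str) or category not in ALLOWED_CATEGORIES:
        return False  # unlisted category breaks rule 3
    return isinstance(confidence, (int, float))


print(follows_format('{"category": "unknown", "confidence": 0.4}'))
print(follows_format("Sure! Here is the JSON you asked for."))
```

A check like this lets an agent retry or fall back when a reply drifts from the format, rather than passing bad output downstream.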

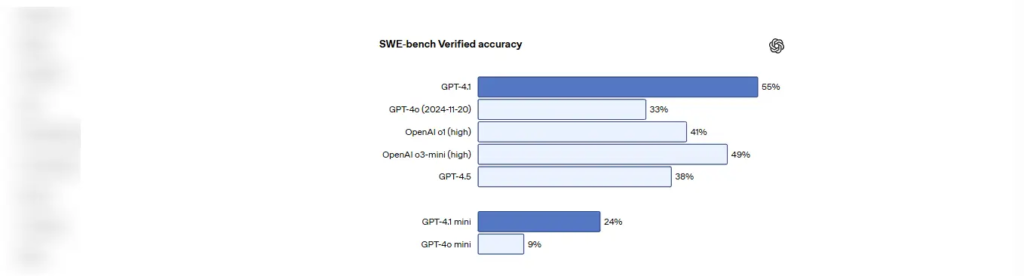

Major Gains in Coding

This update crushes dev tasks.

• Scores 54.6% on SWE-bench Verified (vs. 33.2% for GPT‑4o)

• Writes better frontend code

• Fewer edits, better diffs, cleaner output

Bottom line:

You get code that runs, looks good, and passes tests.

1 Million Token Context Is Now Standard

Yep—GPT‑4.1 supports up to 1 million tokens.

That means it can handle massive codebases, legal docs, research papers—whatever you throw at it.

And it’s not just about size—it actually understands what’s deep in the context too.
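Before you paste a whole repo into a prompt, it helps to sanity-check the size. The sketch below is only a ballpark: the 4-characters-per-token ratio is a rough assumption for English text (a real tokenizer will differ), and the output reserve is a hypothetical number.

```python
CONTEXT_LIMIT = 1_000_000   # tokens, the GPT-4.1 limit quoted above
CHARS_PER_TOKEN = 4         # assumption: rough average for English text
OUTPUT_RESERVE = 10_000     # hypothetical headroom left for the reply


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (not a real tokenizer)."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(documents: list[str]) -> tuple[bool, int]:
    """Return (fits, estimated_tokens) for a batch of documents."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + OUTPUT_RESERVE <= CONTEXT_LIMIT, total


docs = ["contract text " * 1000, "appendix " * 500]
ok, tokens = fits_in_context(docs)
print(ok, tokens)
```

For anything close to the limit, count tokens with an actual tokenizer instead of trusting the estimate.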

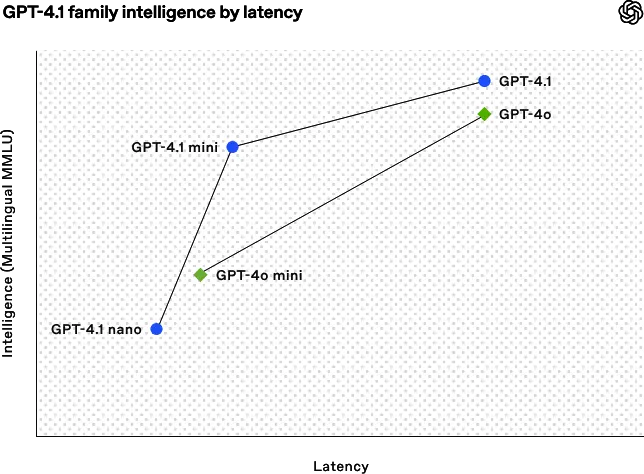

GPT‑4.1 Mini: Small but Sharp

Mini beats GPT‑4o in many benchmarks—and costs 83% less.

It’s fast, smart, and perfect for tools, assistants, or customer-facing apps.

Use it when you want power, without the price tag.

Nano: Built for Speed

GPT‑4.1 Nano is all about fast results.

It’s great for autocomplete, tagging, or short answers.

And it usually returns its first token in under 5 seconds, even with 128,000-token prompts.

Cheapest, fastest model OpenAI’s ever shipped.

Dev Teams Are Already Winning

Real companies are seeing big results.

• Windsurf: 60% higher score than GPT‑4o on its internal coding benchmark, which tracks how often code changes get accepted

• Qodo: More precise code reviews

• Hex: Better SQL analysis, less debugging

These aren’t lab demos. They come from teams running the model on real workloads.

Follows Complex Prompts Better

Multi-step prompts? Conditional logic? GPT‑4.1 handles them.

It scored 38.3% on MultiChallenge, 10.5 points above GPT‑4o, and also beat it on IFEval, especially on hard tasks.

It listens better. That’s a big win.

Frontend Code Just Got Easier

Need UI code? GPT‑4.1 has your back.

It writes cleaner HTML, better styled React apps, and smoother animations.

In head-to-head comparisons, paid human graders preferred GPT‑4.1’s websites over GPT‑4o’s 80% of the time.

Better at Images and Videos Too

GPT‑4.1 also improved on visual tasks.

It reads charts, diagrams, and long videos more accurately than GPT‑4o.

It scored higher on multimodal benchmarks like MMMU (images) and Video-MME (long videos).

Good news if you’re building multimodal apps.

Used in Legal and Finance Right Now

• Thomson Reuters: More accurate multi-document analysis

• Carlyle: Better at pulling numbers from huge reports

It’s already working in complex, high-trust environments.

Lower Latency Across the Board

Models respond faster—especially mini and nano.

• Nano: Under 5s to first token for most prompts

• Mini: Half the latency of GPT‑4o

• Plus: Cached input tokens are now 75% off

Better speed, lower cost. Win-win.

Here’s the Pricing Breakdown

Per 1M tokens:

| Model | Input | Output | Cached Input |
| --- | --- | --- | --- |
| GPT‑4.1 | $2.00 | $8.00 | $0.50 |
| GPT‑4.1 Mini | $0.40 | $1.60 | $0.10 |
| GPT‑4.1 Nano | $0.10 | $0.40 | $0.025 |

The Cached Input prices reflect the 75% prompt-caching discount on input tokens.
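To see what these prices mean for a real request, here is a small cost estimator. It is a sketch built only from the prices listed above: the `estimate_cost` helper is hypothetical, and the dictionary keys are the API model IDs rather than the display names.

```python
# USD per 1M tokens, copied from the pricing breakdown above.
PRICES = {
    "gpt-4.1":      {"input": 2.00, "output": 8.00, "cached_input": 0.50},
    "gpt-4.1-mini": {"input": 0.40, "output": 1.60, "cached_input": 0.10},
    "gpt-4.1-nano": {"input": 0.10, "output": 0.40, "cached_input": 0.025},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  cached_tokens: int = 0) -> float:
    """Estimate the USD cost of one request.

    cached_tokens is the part of input_tokens served from the prompt cache.
    """
    if cached_tokens > input_tokens:
        raise ValueError("cached_tokens cannot exceed input_tokens")
    price = PRICES[model]
    fresh_tokens = input_tokens - cached_tokens
    total = (fresh_tokens * price["input"]
             + cached_tokens * price["cached_input"]
             + output_tokens * price["output"])
    return total / 1_000_000


# 50,000 input tokens (40,000 of them cached) plus 2,000 output tokens.
for model in PRICES:
    print(model, round(estimate_cost(model, 50_000, 2_000, 40_000), 6))
```

On GPT‑4.1 that example request comes to about $0.056. Without caching it would be about $0.116, which is where the discount starts to add up on repeated long prompts.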

Final Thoughts: This Is the New Baseline

GPT‑4.1 isn’t just better—it’s more usable.

It’s built for real products, real teams, and real work.

Whether you care about speed, cost, accuracy, or all three, this update gives you more to work with.

If you’re building with AI, this is your new starting point.